The world of brain-machine interfacing (BMI) has a new poster child. A study of people with tetraplegia, published in Nature, has shown that participants were able to control a robotic arm and hand over a broad space without any explicit training.

The study is an advance in BMI research. It shows that people with profound upper-extremity paralysis or limb loss can use their brain signals to direct useful robotic arm actions.

Earlier work in this field demonstrated that able-bodied monkeys with electrodes implanted in their brains can control a robotic arm. Until recently, however, it was unknown whether people with profound upper-extremity paralysis or limb loss could use their brain signals to do the same.

The new study, by Leigh R. Hochberg of Brown University and colleagues, used the BrainGate neural interface system, which records brain activity through a 96-channel microelectrode array.

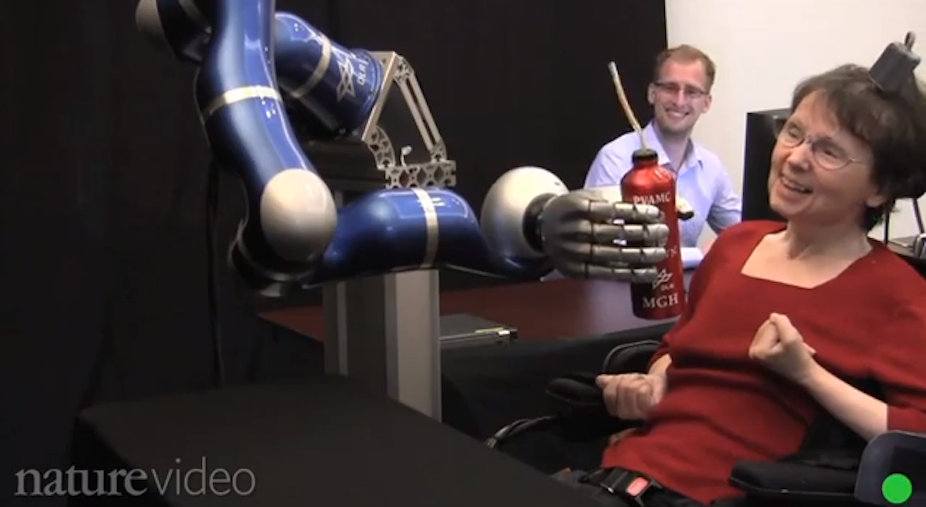

One of the participants, as you’ll see in the video below, was able to drink from a bottle using the robotic arm, something she had not been able to do with her own limb since a stroke nearly 15 years ago.

BMIs, also known as neural interface systems, are central to developing methods that let humans interact with and control machines such as computers or robotic arms.

Such interfaces can detect electrical signals from the brain either invasively or non-invasively. BrainGate is an invasive technology: thin silicon electrodes are surgically inserted a few millimetres into the primary motor cortex, the region of the brain that controls voluntary movement.

Non-invasive BMIs include systems with electrodes placed on the scalp. Several techniques, such as electroencephalography (EEG), can record brain signals non-invasively, but electrodes positioned inside the brain are generally thought to convey more information.

Thought-processing

Brain signals acquired by either technique are then processed to remove noise and unwanted artifacts (the equivalent of static on your TV or radio).
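
To make the clean-up step concrete, here is a minimal Python sketch. It assumes a single channel of samples held in a list and uses two deliberately simple stand-ins, mean subtraction and a moving-average filter, for the band-pass filtering and artifact rejection that real systems perform. The function names are hypothetical and are not part of BrainGate or any BMI library.

```python
from typing import List


def remove_offset(signal: List[float]) -> List[float]:
    """Subtract the mean so the signal is centred on zero (removes a constant offset)."""
    mean = sum(signal) / len(signal)
    return [x - mean for x in signal]


def moving_average(signal: List[float], window: int) -> List[float]:
    """Smooth the signal with a trailing moving average of `window` samples.

    Fast sample-to-sample fluctuations (the "static") are averaged away,
    while slower trends survive. The first few outputs average over
    however many samples have arrived so far.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    smoothed = []
    running_sum = 0.0
    for i, x in enumerate(signal):
        running_sum += x
        if i >= window:
            running_sum -= signal[i - window]  # drop the sample leaving the window
        smoothed.append(running_sum / min(i + 1, window))
    return smoothed


def denoise(signal: List[float], window: int = 3) -> List[float]:
    """Hypothetical pre-processing step: remove the offset, then smooth."""
    return moving_average(remove_offset(signal), window)
```

For example, `denoise([1, 3, 1, 3, 1, 3], window=2)` returns `[-1.0, 0.0, 0.0, 0.0, 0.0, 0.0]`: once the window fills, the rapid up-and-down flicker cancels out.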

To decode movement intentions from neural activity, artificial intelligence models are then used to extract the most informative features (statistical descriptors of the brain signals) that best distinguish the signals associated with different imagined hand movements.
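
As an illustration of what "statistical descriptors" can look like, the sketch below slides a window along a cleaned signal and summarises each window with three common hand-crafted features: mean absolute value, variance and zero-crossing count. This feature set is an assumption chosen for clarity. It is not the set of features used in the Hochberg study, and the function names are hypothetical.

```python
from statistics import pvariance
from typing import Dict, List


def window_features(window: List[float]) -> Dict[str, float]:
    """Compute three simple statistical descriptors of one window of samples."""
    mean_abs = sum(abs(x) for x in window) / len(window)  # overall signal strength
    variance = pvariance(window)                          # spread around the mean
    # Count sign changes between consecutive samples: a rough frequency measure.
    zero_crossings = sum(1 for a, b in zip(window, window[1:]) if a * b < 0)
    return {"mean_abs": mean_abs, "variance": variance,
            "zero_crossings": float(zero_crossings)}


def extract_features(signal: List[float], window_size: int,
                     step: int) -> List[Dict[str, float]]:
    """Slide a window along the signal and describe each window by its features."""
    return [window_features(signal[start:start + window_size])
            for start in range(0, len(signal) - window_size + 1, step)]
```

Each window becomes one feature vector. A decoder then works with these compact summaries rather than the raw samples.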

Finally, pattern-recognition algorithms trained on these features identify the intended hand state from the brain signals in real time.
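
The classification step can be sketched with a nearest-centroid classifier, chosen here only because it is short. Each hand state is represented by the average of its training feature vectors, and a new feature vector is assigned to the state whose average is closest. The labels "open" and "close" and the function names are hypothetical, and this is not the decoder used by Hochberg and colleagues.

```python
import math
from typing import Dict, List, Sequence, Tuple


def train_centroids(
    samples: List[Tuple[Sequence[float], str]]
) -> Dict[str, List[float]]:
    """Average the training feature vectors of each hand state into one centroid."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = {}
    for features, label in samples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        for i, value in enumerate(features):
            sums[label][i] += value
        counts[label] += 1
    return {label: [total / counts[label] for total in totals]
            for label, totals in sums.items()}


def classify(centroids: Dict[str, List[float]],
             features: Sequence[float]) -> str:
    """Return the hand state whose centroid is closest (Euclidean distance)."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))


if __name__ == "__main__":
    # Hypothetical two-dimensional feature vectors labelled with hand states.
    training = [([1.0, 1.0], "open"), ([1.2, 0.8], "open"),
                ([5.0, 5.0], "close"), ([4.8, 5.2], "close")]
    centroids = train_centroids(training)
    print(classify(centroids, [0.9, 1.1]))  # prints "open"
```

In a real-time loop, each new window of signal would be cleaned, turned into a feature vector and passed to `classify`. More capable classifiers, such as linear discriminant analysis or support vector machines, follow the same train-then-predict pattern.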

All the right moves

Although the robot’s movements reported by Hochberg and colleagues were not as fast or accurate as those of an able-bodied person, the participants successfully touched their target objects (in this case foam balls) in 49% to 95% of attempts. These results held across multiple sessions and two different robot designs.

What’s more, about two-thirds of successful reaches ended in a correct grasp. The authors further demonstrated the effectiveness of brain control through one participant’s performance in the bottle-grasping and drinking task I mentioned earlier (see video above). This shows that a neural interface system can perform actions that are useful in daily life.

The results demonstrate that this technology is feasible for people with tetraplegia (also known as quadriplegia). Even years after an injury to the central nervous system, BMIs can restore useful, multidimensional control of complex devices directly from a small sample of neural signals.

Indirect action

In existing research on controlling robotic arms for amputees and people with disabilities, approaches to interfacing the human brain with the external world fall into two main schemes.

The schemes differ in how they capture a person’s intention to perform a movement.

In the first scheme, the interface links to the brain indirectly, through the electrical activity of the muscles recorded as the electromyogram (EMG). This forms a “muscle-computer interface”, a term recently coined by Microsoft.

The EMG reflects the voluntary intention of the central nervous system to contract a muscle (or group of muscles), and it has been well studied and widely used to control robotic prosthetic devices. Most current research in this area focuses on the clinical applications of EMG-driven prosthetics.

But paralysis following spinal cord injury, brainstem stroke, amyotrophic lateral sclerosis and other disorders can disconnect the brain from the body, eliminating the ability to perform voluntary movements and rendering the indirect approach useless.

In such cases, direct access to brain signals is needed.

Brain invasion

The progress Hochberg and colleagues achieved with BrainGate falls within the second scheme, which links directly to the brain through a BMI. This work paves the way for more advanced research in a variety of applications, including the control of robotic arms.

However, besides the risks of the surgery needed to implant the electrodes, such implants have another drawback: scar tissue can form around the electrodes, degrading signal quality over time.

Further efforts to understand, build, and control more powerful brain-signal-acquisition units will be crucial for widespread clinical application of neural-interface systems that can decode the intentions of the brain.

Perhaps the wearable BMI known as Brainput, recently proposed by researchers from MIT (see image above), could one day replace invasive techniques.

This approach uses a non-invasive neuroimaging technology called functional near-infrared spectroscopy (fNIRS) to monitor blood flow in the brain (blood oxygenation and volume) and infer a person’s intentions.

As we move forward, the technology will become more sophisticated, and the results even more remarkable.