WHAT IS AUSTRALIA FOR? Australia is no longer small, remote or isolated. It’s time to ask What Is Australia For?, and to acknowledge the wealth of resources we have beyond mining. Over the next two weeks The Conversation, in conjunction with Griffith REVIEW, is publishing a series of provocations. Our authors are asking the big questions to encourage a robust national discussion about a new Australian identity that reflects our national, regional and global roles.

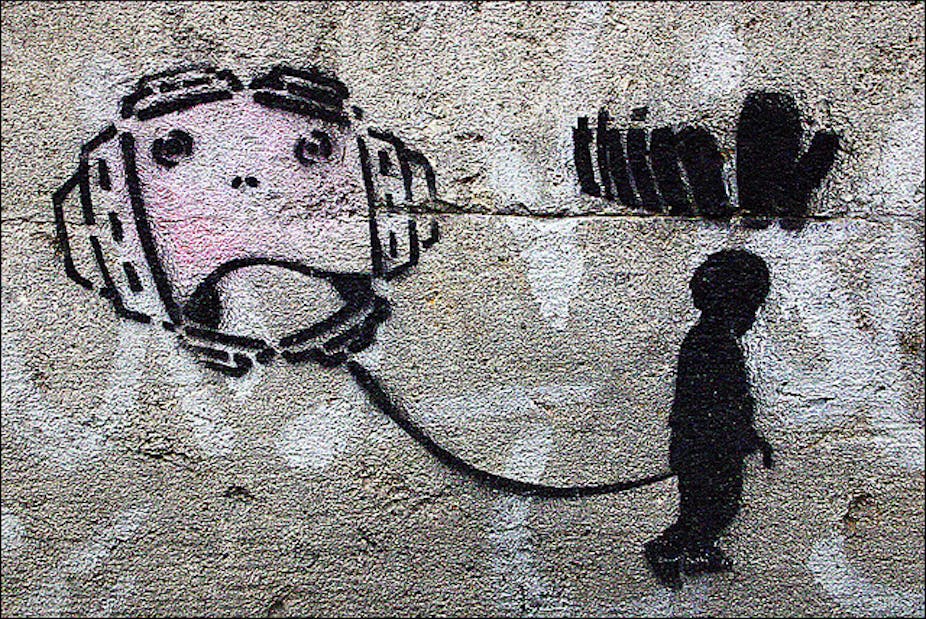

The highest hopes for Australia are based on our intellectual capacity. We already have a substantial profile in education and research, underpinned by a vigorous culture of independent debate which promotes original scientific ideas, as well as theory and analytical narrative in the humanities.

So Australia is good for thinking. But I wonder: is it good for research? When it comes to how we do research – which perhaps represents the pinnacle of thinking – what moral, creative and cultural leadership does Australian research management offer?

The criteria that the Australian Research Council (ARC) uses for evaluating applications (themselves mirrored in numerous other research selection and evaluation processes) present a potential moral deficiency. A very large proportion of the ARC’s judgement rests on the applicant’s track record, prompting the question: is it fair?

Imagine an undergraduate marking rubric where 40% of the grade is attributed to the marks that you got in your previous essays. Throughout secondary and tertiary education, we scrupulously hold to the principle that the work of the student is judged without prejudice on the basis of the quality of the work. The idea that we might be influenced by the student’s grade point average is preposterous.

Research managers would argue that grant processes are not about assessing research but about assessing a proposal for future research. Proposals are funded on the basis of past research, which is reckoned to be predictive, as well as ideas for new work; this prospective element makes the process more analogous to a scholarship, which is awarded on the basis of past scores in undergraduate performance.

But the problem with this logic is that each of those past scores from school to honours is established on the basis of fully independent evaluations (where at no stage is past performance counted), whereas many of the metrics used in research have a dirty component of past evaluations contaminating fresh judgements.

Another angle might be to compare research grants with employment. In a selection process for appointing applicants to an academic post, we are happy to aggregate the judgement of others in previous evaluations; we assiduously examine the CV and assume that those previous judgements were independent in the first place.

But a good selection panel will take the track record with due scepticism; after all, dull and uncreative souls could walk through the door with a great track record. If the selection panel is earnest about employing the best applicant, its members will read the papers or books or musical scores or whatever the applicant claims to have done, irrespective of where they are published, on the principle that you cannot judge a book by its cover.

The only reason that research panels attribute a 40% weighting to track record is to avoid making a fully independent evaluation and taking responsibility for it. But if, as an art critic, I relied on track record for even 10% of my judgement, I would be considered incompetent and unfit for the job. It would be professionally derelict to stand in front of an artwork and allow my perception to be swayed by the artist’s CV. My judgement must absolutely not defer to anyone else’s, even by a small percentage.

My concern is not with the ARC, which is no worse than other funding bodies. My concern is with research management as an arbitrary code across Australian institutions, one that is less than creative and open to moral questions. The fortunes of institutions are understandably tied to their research. But how do we know which research is encouraged or discouraged?

How do we count research (the task undertaken by Excellence in Research for Australia, or ERA) when the measure is likely to dictate research production and promote research in its own image? Sadly, while the ERA had the potential to deliver an unprejudiced and independent evaluation exercise, it adopted the dependence on prior evaluation that characterises most processes in research management. In 2010 and 2012, the ERA evaluations were informed, among other things, by “Indicators of research quality” and “Indicators of research volume and activity”. Amazingly, research income featured in both of these measures: even volume and activity were measured by income.

Research income is the major driver for institutional funding and is a key indicator in various league tables. Research income is also used to determine all kinds of benefits, such as Research Training Scheme places and scholarships for research graduates. So here is the same problem again. We judge merit by a deferred evaluation, in this case according to the grants that the research has been able to attract. It entrenches past judgement on criteria which may be fair or relatively arbitrary.

The grant metric is applied in various contexts with little adjustment beyond benchmarking by discipline. In any given field, academics are routinely berated for not attracting research funding, even when they do not need it. They are reproached for not aggressively pursuing whatever funds might be available in the discipline and which their competitors have secured instead. As a result, their research, however prolific or original in its output, is deemed less competitive than the work of scholars who have gained grants, so their chances of promotion (or even, sometimes, job retention) are slimmer. Such scholars live, effectively, in a long research shadow, punished for their failure to get funding even when the intellectual incentives to seek it are absent.

Directing a scholar’s research by these measures is arguably not only illogical but immoral. On average, the institution already directs more than a third of the salary of a teaching-and-research appointment toward research. That share should be enough to write learned articles and books, if that is the kind of research a scholar does.

In certain fields, the only reason one might want a grant would be to avoid teaching or administration. But most good researchers enjoy teaching and find it immensely rewarding, and the link between teaching and research is a nexus that, in any other circumstance, we would be trying to cultivate.

Getting out of administrative duties may be a more admirable motive; however, even a $30,000 grant entails considerable administration, and larger grants mean more people to employ, and thus more administrative work. You end up with more paperwork, not less, if you win a grant. The incentives to gain a grant are far less conspicuous than the agonies of preparing the applications, which tie the researcher into a manipulative game with little intrinsic reward and a high likelihood of failure, and even of humiliation by cantankerous, competitive peers.

Because the natural incentives are absent, the unwilling academic has to be compelled by targets put into some managerial performance development instrument, where the need for achieving a grant is officially established and the scholar’s progress toward gaining it is monitored.

As a means of wasting time, this process has few equivalents; but if it were only wasteful, we could dismiss it as merely a clumsy bureaucratic encumbrance of the kind that arises in any institution that has policies. But after a long period of witnessing the consequences (formerly as one of those academic managers), I suspect this wasteful system may also be morally dubious, because its inefficiencies are so institutionalised as to disadvantage researchers who are honourably efficient.

As a measure of research prowess, research income has a corrosive effect on the confidence of whole research areas and of academics who, for one reason or another, are unlikely to win grants. Research income is a fetishised figure: it is a number without a denominator. If I want to judge a heater, I measure not only the energy it consumes but also the heat it generates, because these two figures stand in a telling relationship to one another: one can become the denominator of the other, yielding a further figure that represents the heater’s efficiency.

To pursue this analogy, research management examines the heater by adding (or possibly multiplying) the input and the output. In search of a denominator, it then asks how many people own the heater and bask in its warmth. Similarly, we find out how many people generated the aggregated income and output; sure enough, we attribute the research to people. But because income remains in the numerator, the per-person figure is structurally proportional to income and therefore does not measure efficiency.

I question the moral basis of this wilful disregard for efficiency. Research management does not want to reward research efficiency and refuses to recognise the concept anywhere in the system. For instance, a scholar who produces a learned book or several articles every two years using nothing but salary is more efficient than another scholar who produces similar output with the aid of a grant.
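The heater analogy can be made concrete with a little arithmetic. The Python sketch below is purely illustrative: the `Researcher` fields, the $40,000 research share of salary, the $200,000 grant and the output counts are hypothetical assumptions, not figures from any real evaluation, and `per_head_metric` is a simplified stand-in for the aggregate-then-divide approach described above, not the ERA’s actual formula.

```python
from dataclasses import dataclass


@dataclass
class Researcher:
    """Hypothetical research profile; every figure used below is illustrative."""
    salary_for_research: float  # dollars of salary notionally devoted to research (assumed)
    grant_income: float         # dollars of external grant income (assumed)
    outputs: float              # articles or book-equivalents over the same two years (assumed)
    people: int = 1             # headcount that produced the income and output


def per_head_metric(r: Researcher) -> float:
    """Simplified stand-in for the criticised approach: income and output are
    added together, then divided by headcount, so the figure still rises with income."""
    return (r.grant_income + r.outputs) / r.people


def efficiency(r: Researcher) -> float:
    """Output per dollar of total research input: the input becomes the denominator."""
    return r.outputs / (r.salary_for_research + r.grant_income)


# Similar output over two years, one scholar on salary alone, one with a grant.
salary_only = Researcher(salary_for_research=40_000, grant_income=0, outputs=4)
grant_funded = Researcher(salary_for_research=40_000, grant_income=200_000, outputs=4)

print(per_head_metric(grant_funded) > per_head_metric(salary_only))  # True
print(efficiency(salary_only) > efficiency(grant_funded))            # True
```

Both comparisons print `True`: the income-laden figure ranks the grant-funded scholar higher, while the output-per-input ratio favours the scholar working from salary alone, which is exactly the inversion at issue.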

If, suddenly, research efficiency became a factor in the formula – do not hold your breath – institutions would instantly scramble to revise all their performance management instruments. Not because it is right but because there seems to be no moral dimension to research management, only a reflex response to any arbitrary metric set by a capricious king. Individual cells of research management will do not what is right for research and knowledge and the betterment of the human or planetary condition but whatever achieves a higher ranking for their host institutions.

It is commonly believed that research income as an indicator of quality is at least an economical metric, if not always fair. We tend to view such matters in a pragmatic spirit because we cannot see them in an ethical spirit. On the quality of funded research, I am personally agnostic because, when all is said and done, there is no basis for faith. There may be a strong link between research income and research quality, or there may be a weak or even inverse link, depending on the discipline and, above all, how we judge it.

Perhaps, being circumspect, one could say that research management is not so much immoral as amoral, in the sense that it may be free of ethical judgement. But no argument for relieving the field of moral judgement is persuasive. Research management can never be amoral, because it concerns the distribution of assets that confer favour and advantage, and standing outside the sphere of moral judgement is not an option.

It is good that we have research grants, because they allow research, especially expensive research, to prosper more than it otherwise would; but the terms of managing research, which rely so heavily on a chain of deferred judgements and yield invidious and illogical rankings, rest on morally dubious assumptions. We can accept that research management is inexact and messy; none of that makes it ugly or immoral, just patchy and occasionally wrong. But the structural problems with research management go further: they skew research and damage the academic psyche.

Lecturers commence their academic career as researchers and, from early days, are researchers at heart. They love research: they become staff by virtue of doing a research degree and are cultivated thanks to their research potential and enthusiasm. Bit by bit, and with many ups and downs, they divide into winners and losers: a small proportion of researchers who achieve prestigious grants, and a larger proportion who resolve to continue their research plans on the basis of salary, perhaps participating in colleagues’ funded projects and perhaps carrying a feeling of inadequacy, despite their publications, that is sometimes prompted by pressure from their supervisor.

Within this stressful scenario, even the successful suffer anxiety; and for the demographic as a whole, the dead hand of research management makes them anxious about their performance. In relatively few years, academics become scared of research and see it as more threatening than joyful; they pursue it with an oppressive sense of their shortcomings, where their progress is measured by artificial criteria devised to make them unsettled and hungry.

Though we dress up this negotiation in the language of encouragement, it is structurally an abusive power relationship that demoralises too many good souls in too little time. It is not as if we are unaware of this attrition of spirit: many academics become exhausted and, with mounting frustration, opt out of research for good reasons.

Research management, which governs the innovative thinking of science and the humanities, is neither scientific nor humane nor innovative; and my question, putting all of this together, is whether it can be considered moral, or progressive enough to match the hopes that we have for research itself. A system of grants, however arbitrary, is not immoral in itself, provided that it is not coupled to other conditions that affect a scholar’s career.

Uncoupling grant income from research evaluation on the one hand, and from future intellectual opportunities on the other, seems necessary to the moral probity of research management. Is it ethically proper to keep rating researchers by their grant income simply because it conveniently yields a metric for research evaluation? The crusade to evaluate research has been conducted on a peremptory basis, either heedless of its damaging consequences or smug in the bossy persuasion that greater hunger will make Australian research more internationally competitive.

Is such a system, so ingeniously contrived to spoil the spirits of so many researchers, likely to enhance Australia’s competitiveness? We were told at the beginning of the research evaluation exercise that the public has a right to know that the research it funds is excellent. But after so many formerly noble institutions have debased themselves by manipulating their data sets toward a flattering figure, we have no more assurance of quality than we did before evaluating it.

The conspicuous public attitude to research is respect and admiration, bordering on deference. So I wonder whether there is any justifiable basis for research evaluation other than to provide the illusion of managerialism, or to serve a misguided ideology that sees hunger and anxiety as spurs to productivity. I see massive disadvantages in our systems of evaluation but fail to see any advantages.

To maintain this disenfranchising system in the knowledge of its withering effect strikes me as morally unhappy and spiritually destructive. It would take a diabolical imagination to come up with a system better contrived to wreck the spirits of so many good researchers and dishearten them about their own achievements.

The system needs to be rebuilt from the ground up, on a principle that dignifies the generosity and efficiency of researchers. I look forward to a time when the faith that the public has in our research is matched by the faith that researchers themselves have in the structures that manage them.

Read more provocations at The Conversation and at Griffith REVIEW.